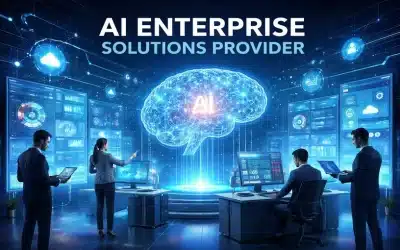

Global Report cautions against losing control over use of AI

A frantic race by leading tech giants to develop ever more powerful artificial intelligence (AI) could have "harmful" effects on humans, Yoshua Bengio, a leading researcher, computer scientist and winner of the prestigious 2018 Turing Award, warned Thursday as scientists marked the start of the Paris Global Summit on the technology.

Bengio pointed to AI risks that are already widely acknowledged, such as its use to create fake or misleading content online. But the University of Montreal professor added that “proof is steadily appearing of additional risks, like biological attacks or cyberattacks”. In the longer term, he fears a possible “loss of control” by humans over AI systems, potentially motivated by “their own will to survive”.

The report presented at the Paris Global Summit on AI also highlights several key risks associated with the current trajectory of AI development, including:

- Loss of human control: As AI systems become more autonomous, there is growing concern that humans may lose the ability to effectively oversee and manage these technologies.
- Social and economic impacts: The integration of AI into various sectors could cause significant disruption, including job displacement and widening economic inequality.
- Ethical and safety concerns: Deploying AI without adequate safeguards may create ethical dilemmas and safety problems, particularly if AI systems behave in unpredictable ways.

In response to these challenges, the report advocates for a balanced approach that fosters innovation while ensuring safety. It calls for international collaboration to establish governance frameworks that can effectively manage the development and deployment of AI technologies.

The Paris Global Summit on AI serves as a platform for experts, policymakers, and industry leaders to discuss these pressing issues and work towards solutions that harness the benefits of AI while mitigating its risks.

The emergence last month of the low-cost, high-performance Chinese AI model DeepSeek had “sped up the race, which isn’t good for safety”, Bengio added. He called for heavier international regulation and more extensive research on AI safety, which for now accounts for “a tiny fraction” of the massive investments being made in the AI sector.

“Without government intervention, I don’t know how we’re going to get through this,” Bengio said.

Since Donald Trump's inauguration, the United States, still the world's leading AI nation for now, has dropped former president Joe Biden's attempt to impose some rules on the technology's development. When OpenAI's ChatGPT burst onto the public arena two years ago, "I felt the urgency of thinking about this question of safety," Bengio said as he presented the report, which is modelled on the now-familiar assessments of the Intergovernmental Panel on Climate Change (IPCC).

“What scares me the most is the possibility that humanity could disappear within 10 years. It’s terrifying. I don’t know why more people don’t realise it,” he said. Around 100 experts from 30 countries, the United Nations, the European Union and the OECD contributed to the Bengio-led report, published at the end of January. The work was kicked off at a previous summit on AI safety in Britain in November 2023.

Credits: barrons.com | Author: Felix Orina
